Deep-learning neural networks will be used to improve current Sound Source Separation technologies so that each instrument of the orchestra can be isolated.

Music students and professional musicians will be able to practise with ensembles and orchestras by muting their own instrument, turning standard recordings into karaoke versions in real time (so-called “minus-one” recordings), and hearing the result as if from their own seat on stage.


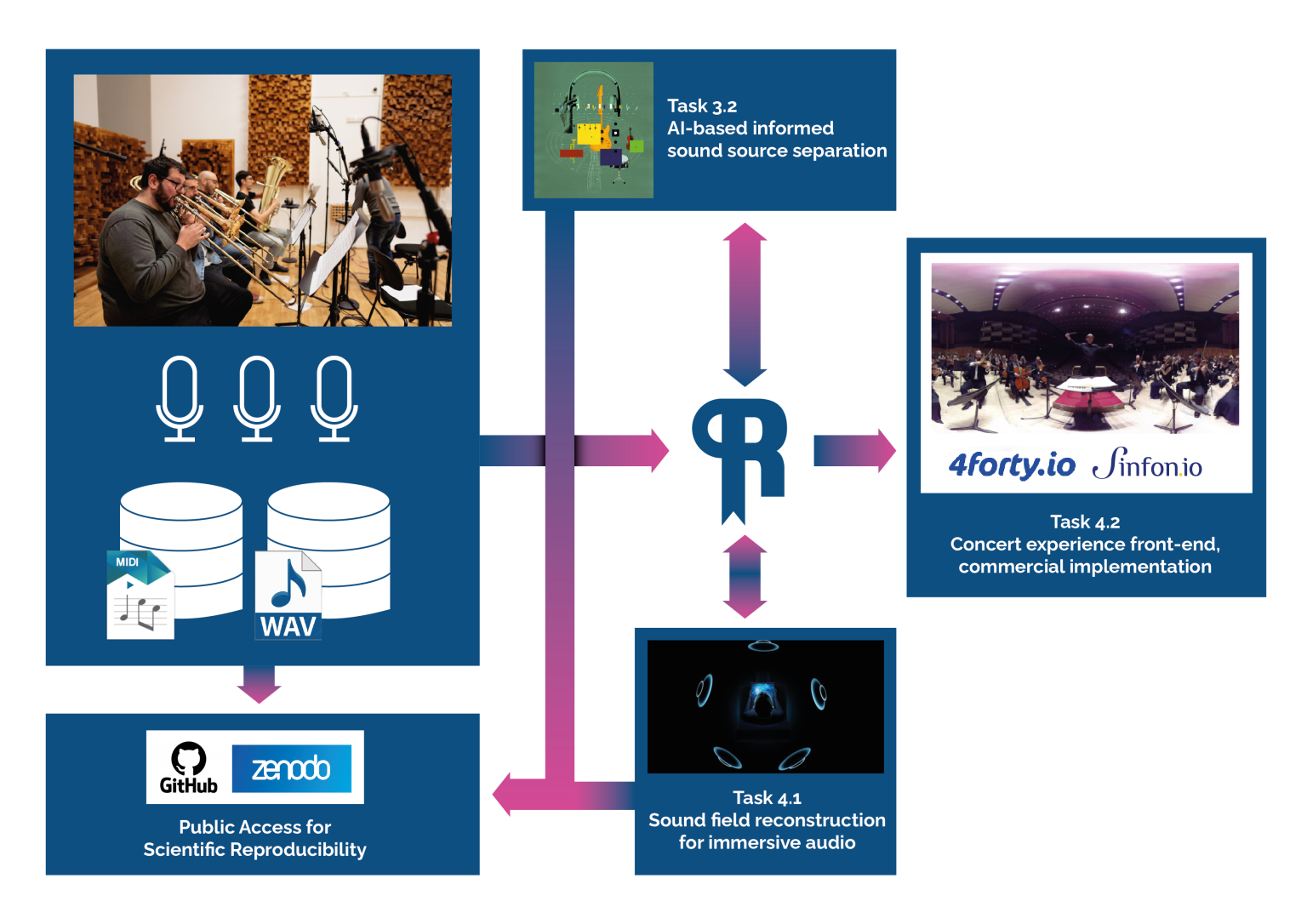

A Sound Sample Library

An immersive audio training set covering all the standard instruments of the orchestra will be recorded to train the AI to recognise each instrument's timbre and its typical position on stage.

Sound Source Separation Tools

Deep-learning solutions will extract the individual parts from multichannel classical recordings so that they can be remixed on demand, rendering the sound field as it would be heard from any freely chosen point on the virtual sound stage.
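As a rough illustration of what rendering from a chosen point involves, the sketch below remixes already-separated mono stems for a listener at an arbitrary position. It assumes a deliberately simplified free-field model (a propagation delay plus 1/r attenuation, with stage coordinates in metres); the function `render_at_listener` and its parameters are hypothetical, and a real renderer would also need room reflections, instrument directivity and binaural cues.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, roughly at 20 degrees Celsius


def render_at_listener(stems, positions, listener, sample_rate=48000):
    """Mix separated mono stems as heard at `listener` (hypothetical helper).

    Simplified free-field assumption: each stem is delayed by its travel
    time to the listener and attenuated by the inverse-distance law.
    """
    params = []
    for name, signal in stems.items():
        sx, sy = positions[name]
        r = math.hypot(sx - listener[0], sy - listener[1])
        delay = round(r / SPEED_OF_SOUND * sample_rate)  # delay in samples
        gain = 1.0 / max(r, 1.0)  # clamp at a 1 m reference distance
        params.append((signal, delay, gain))

    length = max((len(sig) + d for sig, d, _ in params), default=0)
    out = [0.0] * length
    for signal, delay, gain in params:
        for i, x in enumerate(signal):
            out[i + delay] += gain * x
    return out
```

Moving `listener` changes every stem's delay and gain at once, which is why the parts must first be separated: a finished stereo mix offers no per-instrument handle to adjust.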

Minus-One Tools

These technologies will be extended so that individual instruments can be selectively soloed or muted, producing karaoke versions in real time.
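Once separated stems are available, a minus-one mix amounts to summing every stem except the muted ones, or only the soloed ones. The helper `minus_one_mix` below is a hypothetical sketch on plain Python lists; a real-time version would apply the same logic to each audio buffer as it arrives, and any bleed left by imperfect separation would remain audible in the mix.

```python
def minus_one_mix(stems, mute=(), solo=()):
    """Sum separated stems, muting or soloing them by name (hypothetical helper).

    If `solo` is non-empty, only those stems are kept; otherwise every stem
    except those listed in `mute` is kept.
    """
    unknown = (set(mute) | set(solo)) - set(stems)
    if unknown:
        raise KeyError(f"unknown stems: {sorted(unknown)}")

    keep = set(solo) if solo else set(stems) - set(mute)
    length = max((len(s) for s in stems.values()), default=0)
    out = [0.0] * length
    for name in sorted(keep):  # fixed order keeps the float sums deterministic
        for i, x in enumerate(stems[name]):
            out[i] += x
    return out
```

For example, a horn player would call `minus_one_mix(stems, mute=["horn"])` to practise along with the rest of the orchestra.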

About the Sound Source Separation Techniques