Sound Source Separation Techniques

Advances in Audio Source Separation Techniques and the REPERTORIUM Project

Algorithmic Techniques

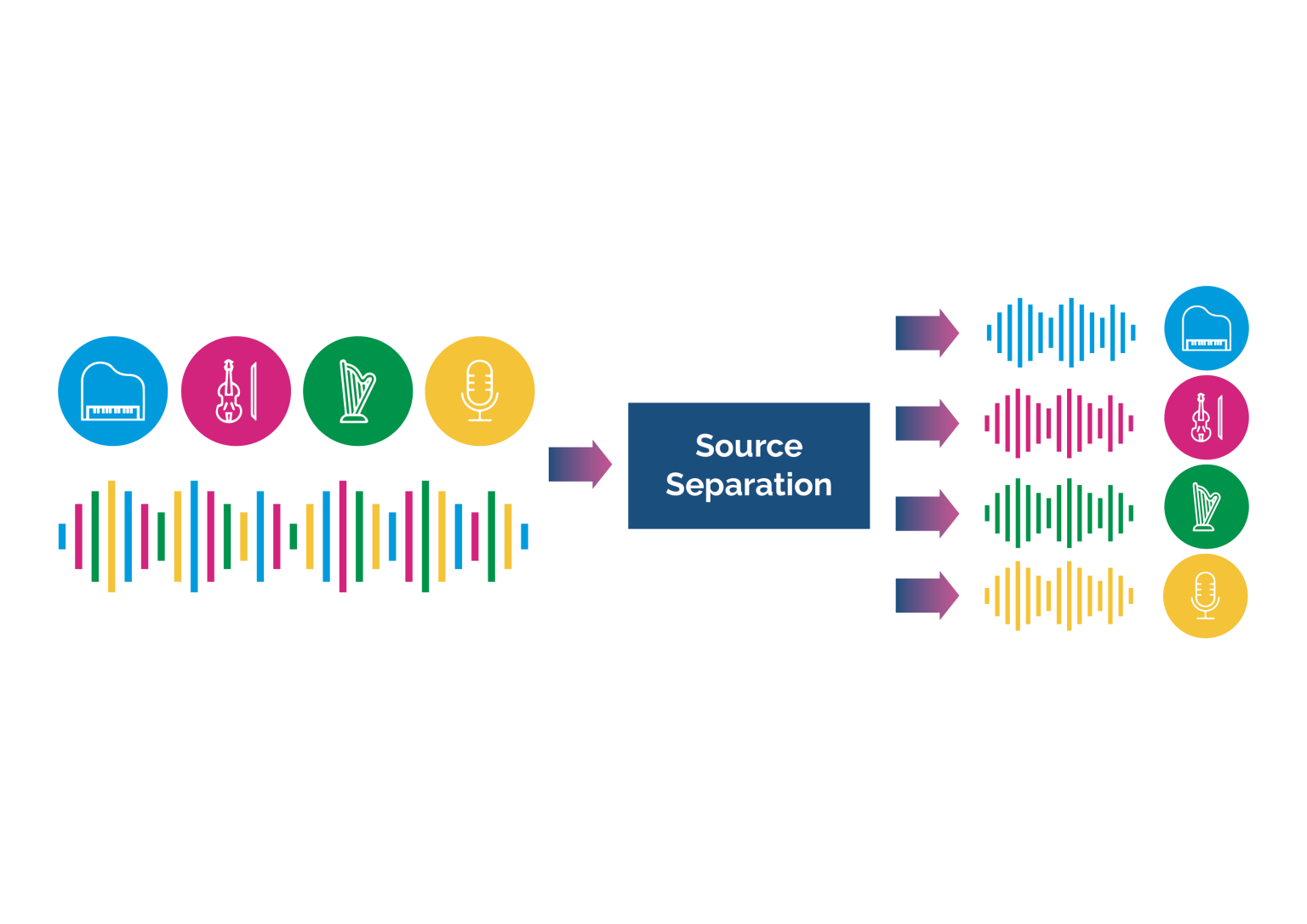

Audio source separation aims to isolate the constituent sound sources in an audio mixture. This task has been one of the most widely studied research problems in the music information retrieval community. Since most music audio is available only in the form of mastered mixes, a system capable of music source separation has many applications, e.g. automatic creation of karaoke tracks, acoustic emphasis, music unmixing and remixing, and other uses in music production and education.

Many approaches have been proposed over the last two decades to achieve this separation. A classical approach consists of decomposing a time-frequency representation of the mixture signal using methods such as non-negative matrix factorisation (NMF), independent component analysis (ICA), or probabilistic latent component analysis (PLCA). Among these factorisation techniques, NMF has been widely used for music audio signals, as it describes the signal as a non-subtractive combination of sound objects (or “atoms”) over time. However, without further information, the separation quality of these statistical methods is limited. One solution is to exploit the spectro-temporal properties of the sources. For example, spectral harmonicity and temporal continuity can be assumed for several musical instruments, while percussive instruments are characterised by short bursts of broadband energy [1]. Score information can also be used to restrict the time-frequency regions belonging to each source [2-4]. When multichannel recordings are available, spatial information can also be exploited to improve the separation results [5-8].
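
As a rough illustration of the factorisation idea, the sketch below decomposes a toy non-negative matrix (standing in for a magnitude spectrogram) into spectral atoms `W` and time activations `H`. It assumes the Euclidean cost with the standard multiplicative updates, which is only one of several NMF variants and not necessarily the one used in the cited works. The function name `nmf_euclidean` and the optional `H_mask` argument are hypothetical: `H_mask` mimics a score-derived constraint by zeroing activations where a component is known to be silent.

```python
import numpy as np

def nmf_euclidean(V, n_components, n_iter=200, eps=1e-9, seed=0, H_mask=None):
    """Factorise a non-negative matrix V (freq x time) as V ~ W @ H.

    Uses multiplicative updates for the Euclidean cost. H_mask is an
    optional 0/1 matrix (hypothetical, e.g. derived from a score);
    entries set to zero stay zero under multiplicative updates.
    """
    rng = np.random.default_rng(seed)
    n_freq, n_frames = V.shape
    W = rng.random((n_freq, n_components)) + eps   # spectral atoms
    H = rng.random((n_components, n_frames)) + eps # time activations
    if H_mask is not None:
        H = H * H_mask
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H

# Toy "spectrogram": two atoms that are active in different frames.
W_true = np.array([[1.0, 0.0], [0.5, 0.0], [0.0, 1.0], [0.0, 0.8]])
H_true = np.array([[1.0, 1.0, 0.0, 0.0, 1.0],
                   [0.0, 0.0, 1.0, 1.0, 1.0]])
V = W_true @ H_true

# Blind factorisation.
W, H = nmf_euclidean(V, n_components=2)
rel_err = np.linalg.norm(V - W @ H) / np.linalg.norm(V)

# "Score-informed" factorisation: activations restricted to known frames.
score_mask = (H_true > 0).astype(float)
W_s, H_s = nmf_euclidean(V, n_components=2, H_mask=score_mask)
```

In a separation setting, each source would typically be reconstructed from the atoms assigned to it, for example via a soft mask built from `W[:, k:k+1] @ H[k:k+1, :]`.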

Data-Driven Techniques

When training material is available, the spectro-temporal patterns of the sources can be learned from it, and the methods are referred to as supervised. Several supervised signal decomposition methods have been presented that provide superior results to the blind scenario. Recently, deep neural networks (DNNs) have been used extensively for this purpose. Existing methods mostly train DNNs using either the spectrogram as the input signal representation [9,10] or the time-domain representation directly [11,12]. Convolutional neural networks (CNNs) [10,13] and long short-term memory (LSTM) networks [14,15] are popular choices of model architecture for music source separation. Some of the latest top-performing music source separation models are Open-Unmix [15], MMDenseLSTM [16], Meta-TasNet [17], Band-Split RNN [18], and Demucs [19].
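
For spectrogram-domain models, one common way to turn network outputs into separated signals is time-frequency masking. The sketch below is a minimal illustration under that assumption and does not reproduce any of the cited systems: random non-negative arrays stand in for the per-source magnitude estimates a trained network would produce, and the function names `soft_masks` and `apply_masks` are hypothetical.

```python
import numpy as np

def soft_masks(source_mags, eps=1e-8):
    """Convert per-source magnitude estimates, shape (n_sources, freq, time),
    into ratio masks that sum to (almost exactly) one in every bin."""
    power = source_mags ** 2
    return power / (power.sum(axis=0, keepdims=True) + eps)

def apply_masks(mixture_stft, masks):
    """Apply real-valued masks to a complex mixture STFT.
    The phase of the mixture is reused for every source."""
    return masks * mixture_stft[np.newaxis, ...]

rng = np.random.default_rng(0)

# Placeholder complex STFT of the mixture (5 frequency bins, 8 frames).
mixture_stft = rng.standard_normal((5, 8)) + 1j * rng.standard_normal((5, 8))

# Stand-in for network outputs: magnitudes for 2 sources (not a real model).
est_mags = rng.random((2, 5, 8)) + 0.1

masks = soft_masks(est_mags)
estimates = apply_masks(mixture_stft, masks)  # shape (2, 5, 8)
```

Because the masks sum to one in each time-frequency bin, the source estimates add back up to the mixture. Time-domain models instead predict the waveforms directly and do not need this step.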

However, in the case of classical music, the lack of a multi-instrumental, well-annotated training set limits the performance of AI-based methods. REPERTORIUM will advance research on classical music source separation by delivering a dataset of individually recorded instruments, together with novel source separation approaches that exploit both score and spatial information from the recordings.

References

[1] F. Canadas-Quesada, D. Fitzgerald, P. Vera-Candeas and N. Ruiz-Reyes, “Harmonic-percussive sound separation using rhythmic information from non-negative matrix factorization in single-channel music recordings”, Proc. 20th Int. Conf. Digit. Audio Effects (DAFx), pp. 276-282, 2017.

[2] R. Hennequin, B. David and R. Badeau, “Score informed audio source separation using a parametric model of non-negative spectrogram,” 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech Republic, 2011, pp. 45-48, doi: 10.1109/ICASSP.2011.5946324.

[3] S. Ewert and M. Müller, “Using score-informed constraints for NMF-based source separation,” 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 2012, pp. 129-132, doi: 10.1109/ICASSP.2012.6287834.

[4] A. J. Munoz-Montoro, J. J. Carabias-Orti, P. Vera-Candeas et al., “Online/offline score informed music signal decomposition: application to minus one”, EURASIP J. Audio Speech Music Process., vol. 2019, article 23, 2019. https://doi.org/10.1186/s13636-019-0168-6

[5] Y. Mitsufuji and A. Roebel, “Sound source separation based on non-negative tensor factorization incorporating spatial cue as prior knowledge,” 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, 2013, pp. 71-75. https://doi.org/10.1109/ICASSP.2013.6637611

[6] J. Nikunen and T. Virtanen, “Direction of arrival based spatial covariance model for blind sound source separation”, IEEE/ACM Trans. Audio Speech Language Process., vol. 22, no. 3, pp. 727-739, Mar. 2014.

[7] J. J. Carabias-Orti, J. Nikunen, T. Virtanen and P. Vera-Candeas, “Multichannel blind sound source separation using spatial covariance model with level and time differences and nonnegative matrix factorization”, IEEE/ACM Trans. Audio Speech Language Process., vol. 26, no. 9, pp. 1512-1527, Sep. 2018.

[8] K. Sekiguchi, Y. Bando, A. A. Nugraha, K. Yoshii and T. Kawahara, “Fast multichannel nonnegative matrix factorization with directivity-aware jointly-diagonalizable spatial covariance matrices for blind source separation”, IEEE/ACM Trans. Audio Speech Language Process., vol. 28, pp. 2610-2625, 2020.

[9] A. A. Nugraha, A. Liutkus and E. Vincent, “Multichannel audio source separation with deep neural networks”, IEEE/ACM Trans. Audio Speech Language Process., vol. 24, no. 9, pp. 1652-1664, Sep. 2016.

[10] E. M. Grais, G. Roma, A. J. Simpson and M. D. Plumbley, “Combining mask estimates for single channel audio source separation using deep neural networks”, Proc. Annu. Conf. Int. Speech Commun. Assoc., pp. 3339-3343, 2016.

[11] D. Stoller, S. Ewert and S. Dixon, “Wave-U-Net: A multi-scale neural network for end-to-end audio source separation”, Proc. 19th Int. Soc. Music Inf. Retr. Conf. (ISMIR), pp. 334-340, 2018.

[12] A. Défossez, F. Bach, N. Usunier and L. Bottou, “Music source separation in the waveform domain”, arXiv:1911.13254, 2019.

[13] S. Park, T. Kim, K. Lee and N. Kwak, “Music source separation using stacked hourglass networks”, Proc. 19th Int. Soc. Music Inf. Retr. Conf. (ISMIR), pp. 289-296, 2018.

[14] P. Seetharaman, G. Wichern, S. Venkataramani and J. L. Roux, “Class-conditional embeddings for music source separation”, Proc. IEEE Int. Conf. Acoust. Speech Signal Process. (ICASSP), pp. 301-305, May 2019.

[15] F. R. Stöter, A. Liutkus and N. Ito, “The 2018 signal separation evaluation campaign” in Latent Variable Analysis and Signal Separation, Cham, Switzerland: Springer, vol. 10891, pp. 293-305, 2018.

[16] N. Takahashi, N. Goswami and Y. Mitsufuji, “MMDenseLSTM: An efficient combination of convolutional and recurrent neural networks for audio source separation”, Proc. 16th Int. Workshop Acoustic Signal Enhancement (IWAENC), pp. 106-110, Sep. 2018.

[17] D. Samuel, A. Ganeshan and J. Naradowsky, “Meta-learning extractors for music source separation”, Proc. IEEE Int. Conf. Acoust. Speech Signal Process. (ICASSP), pp. 816-820, May 2020.

[18] Y. Luo and J. Yu, “Music Source Separation With Band-Split RNN,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 31, pp. 1893-1901, 2023.

[19] S. Rouard, F. Massa and A. Défossez, “Hybrid Transformers for Music Source Separation,” 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 2023, pp. 1-5.